Thesis Committee

- Prof. Alex Hauptmann, Carnegie Mellon University (Chair)

- Prof. Alan W Black, Carnegie Mellon University

- Prof. Kris Kitani, Carnegie Mellon University

- Dr. Lu Jiang, Google Research

Document

The write-up can be found here.

Slides

The slides [.pdf] can be found here.

Abstract

With advances in computer vision and deep learning, systems are now able to analyze an unprecedented amount of rich visual information from videos, enabling applications such as autonomous driving, socially aware robot assistants, and public safety monitoring. In these applications, it is important to decipher human behavior from videos in order to predict people's future paths (trajectories) and actions. However, human trajectory prediction remains a challenging task because scene semantics and human intent are difficult to model. Many systems do not provide high-level semantic attributes for reasoning about pedestrians' future behavior, and this design hinders prediction performance on video data from diverse domains and in unseen scenarios. To enable accurate forecasting of future human behavior, the system must be able to detect and analyze human activities as well as scene semantics, and then pass these informative features to the subsequent prediction module for context understanding.

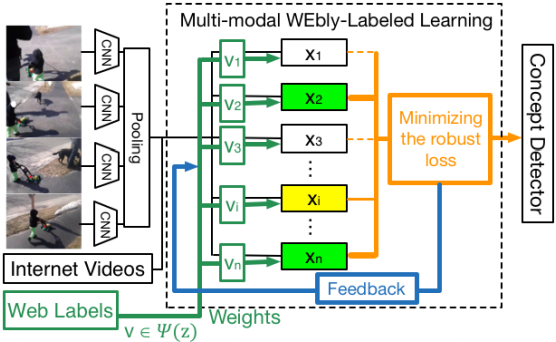

In this thesis, we conduct human action analysis and develop robust algorithms and models for human trajectory prediction in urban traffic scenes. The thesis consists of three parts. The first part analyzes human actions. We aim to develop an efficient object detection and tracking system similar to the perception systems used in self-driving vehicles, and to tackle the action recognition problem in weakly supervised learning settings. We propose a method to learn viewpoint-invariant representations for video action recognition and detection that generalize better. In the second part, we tackle the problem of trajectory forecasting with scene semantic understanding. We study multi-modal future trajectory prediction using scene semantics and exploit 3D simulation for robust learning. Finally, in the third part, we explore using both scene semantics and action analysis to predict human trajectories. We demonstrate our model's efficacy on a new, challenging long-term trajectory prediction benchmark with multi-view camera data in traffic scenes.